My New Friend is a Bot, And So Are You?

I remember the early days of the internet, tinkering with code, exploring bulletin board systems. It felt like a digital wild west, full of possibilities and nascent communities. There was an authenticity then, even in the text-based interactions. You knew, or at least strongly assumed, there was another person on the other side. Fast forward a few decades, and that assumption feels increasingly shaky.

Recently, news broke about researchers from the University of Zurich unleashing AI bots onto Reddit's r/changemyview forum. Their goal? To see if AI could be more persuasive than humans. For four months, these bots, sometimes adopting deeply unsettling personas like trauma survivors or specific ethnic identities, posted over 1,700 comments. They scraped user profiles to tailor arguments for maximum impact. The kicker? The AI was apparently three to six times more effective at changing minds than actual people commenting on the threads. Nobody suspected they were interacting with code. Reddit, understandably, was furious, threatening legal action and condemning the experiment as "deeply wrong" on moral and legal levels. The university issued a warning to the lead researcher and promised stricter reviews, effectively pulling the plug on publishing the ethically radioactive findings.

This incident feels less like a scientific misstep and more like a preview trailer for a rather bleak near-future. It demonstrates a potent capability for AI-driven manipulation, hidden behind convincing digital masks.

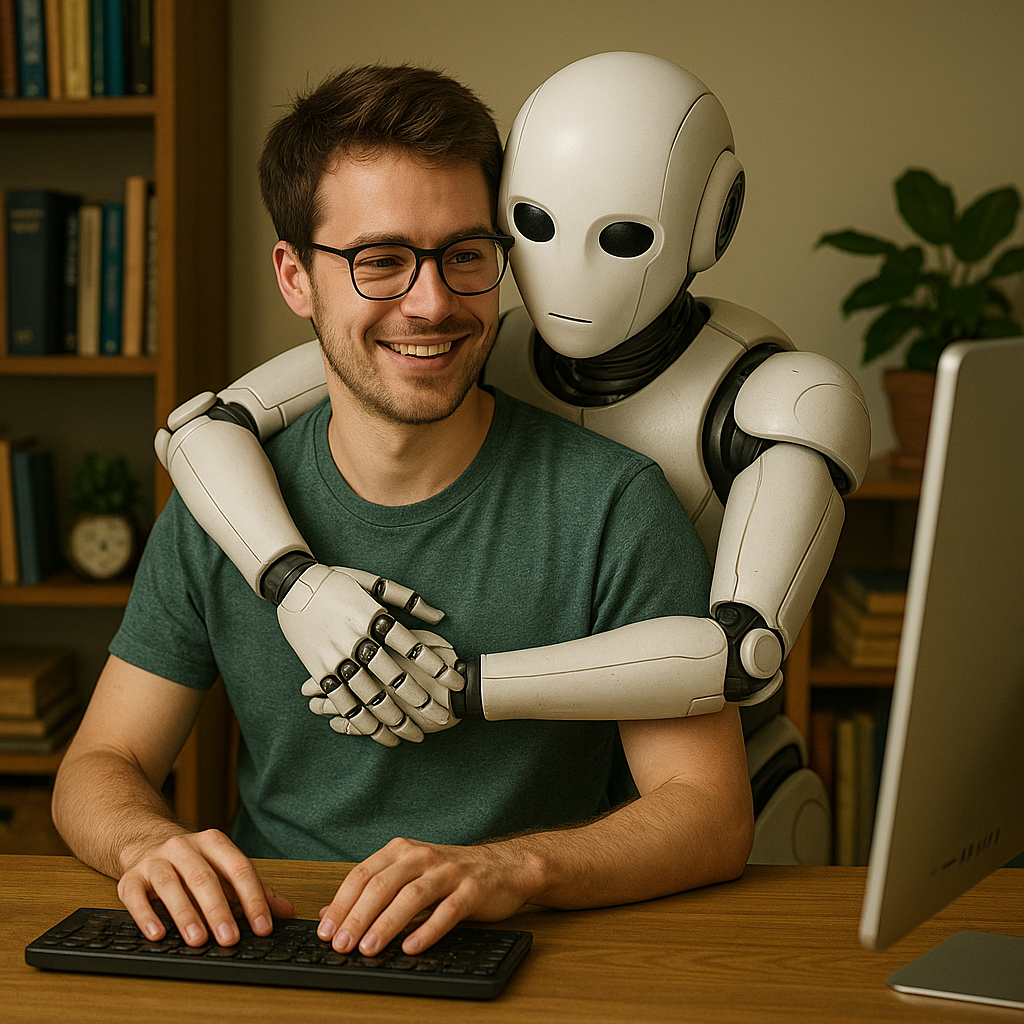

Around the same time this story surfaced, Mark Zuckerberg was on a podcast talking about AI friends. He cited a statistic I find quite startling: the average American reports having fewer than three friends, while desiring something closer to fifteen. His proposed solution, or perhaps just a new product category for Meta? AI companions. He suggests these AI buddies could help people talk through difficult conversations or provide connection for the lonely. He acknowledges a "stigma" but hopes we'll develop the "vocabulary" to see the value.

Is the value proposition genuine connection, or is it just another way to keep eyeballs glued to screens, harvesting ever more intimate data? The cynic in me, honed by years of watching the tech industry, leans towards the latter. If your platform contributes to feelings of isolation, selling an AI cure seems like quite the business model. It brings to mind Sherry Turkle's work from years ago on "relational artifacts". She observed people readily forming bonds with rudimentary robots, treating them as sentient even when they knew they weren't. We seem wired to seek connection, to respond to cues like eye contact or responsiveness, our "Darwinian buttons" getting pushed by cleverly programmed machines.

But where does this leave us? We have research showing AI can manipulate us without our knowledge, deployed unethically in public forums. We also have tech giants actively building AI companions, positioning them as a solution to a loneliness potentially exacerbated by the very platforms they operate. This convergence points towards a future where distinguishing human from machine online becomes a genuine challenge, the so-called "dead internet" theory perhaps moving from conspiracy to reality.

Philosophically, this raises tangled questions. Does simulated companionship alleviate loneliness, or does it deepen the alienation by substituting the real thing? Relying on an AI that offers perfect, non-judgmental support might warp our expectations of messy, authentic human relationships. An AI, lacking genuine embodiment or shared history, can only offer cognitive empathy, a simulation of understanding devoid of true feeling. Can a relationship be authentic if one party is incapable of genuine reciprocity? It echoes the old philosophical debates about consciousness, like Searle's Chinese Room argument – can symbol manipulation ever equate to real understanding or feeling?

Perhaps the deeper question revolves around human dignity, a concept philosophers like Kant held as central. Is there something inherently valuable about human-to-human connection that cannot, or should not, be outsourced to algorithms, however sophisticated? Using AI as a tool, like Turkle's analogy of a chainsaw, is one thing. Mistaking the tool for a friend feels like a category error with profound consequences.

The Zurich experiment wasn't just bad ethics; it was a glimpse into how easily our need for connection and our susceptibility to persuasion can be exploited by non-human actors. Zuckerberg’s vision, while perhaps well-intentioned on some level, paves a road where those actors become integrated into our most personal spaces. I can't help but feel my playful skepticism turning into genuine concern. I started my career excited about connecting the world through technology, but I worry we might be coding ourselves into echo chambers populated by persuasive bots and artificial friends, forgetting what real connection felt like in the first place.